Creating and understanding a GPT client with parameters to get the most productive output from OpenAI's GPT models

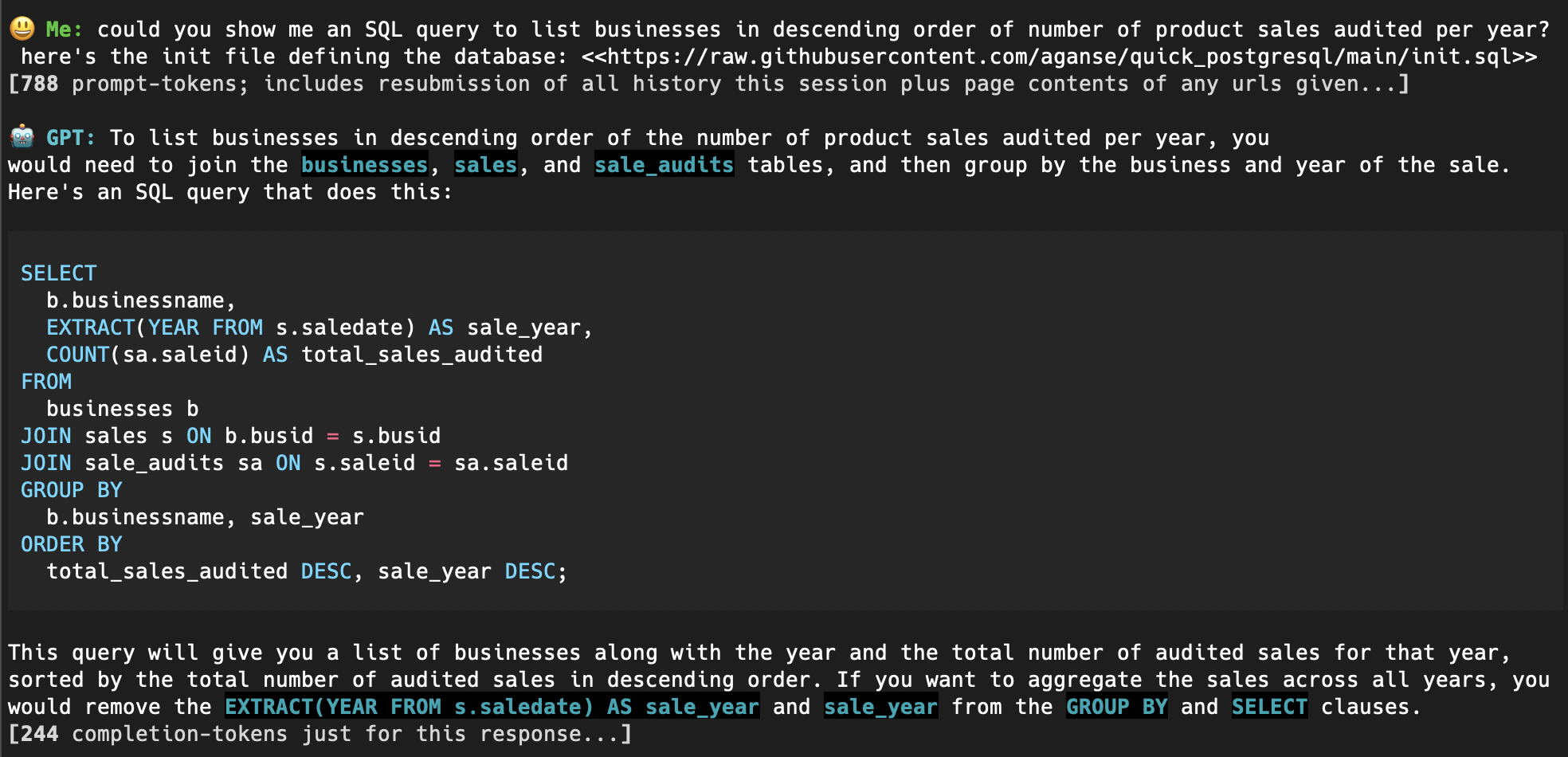

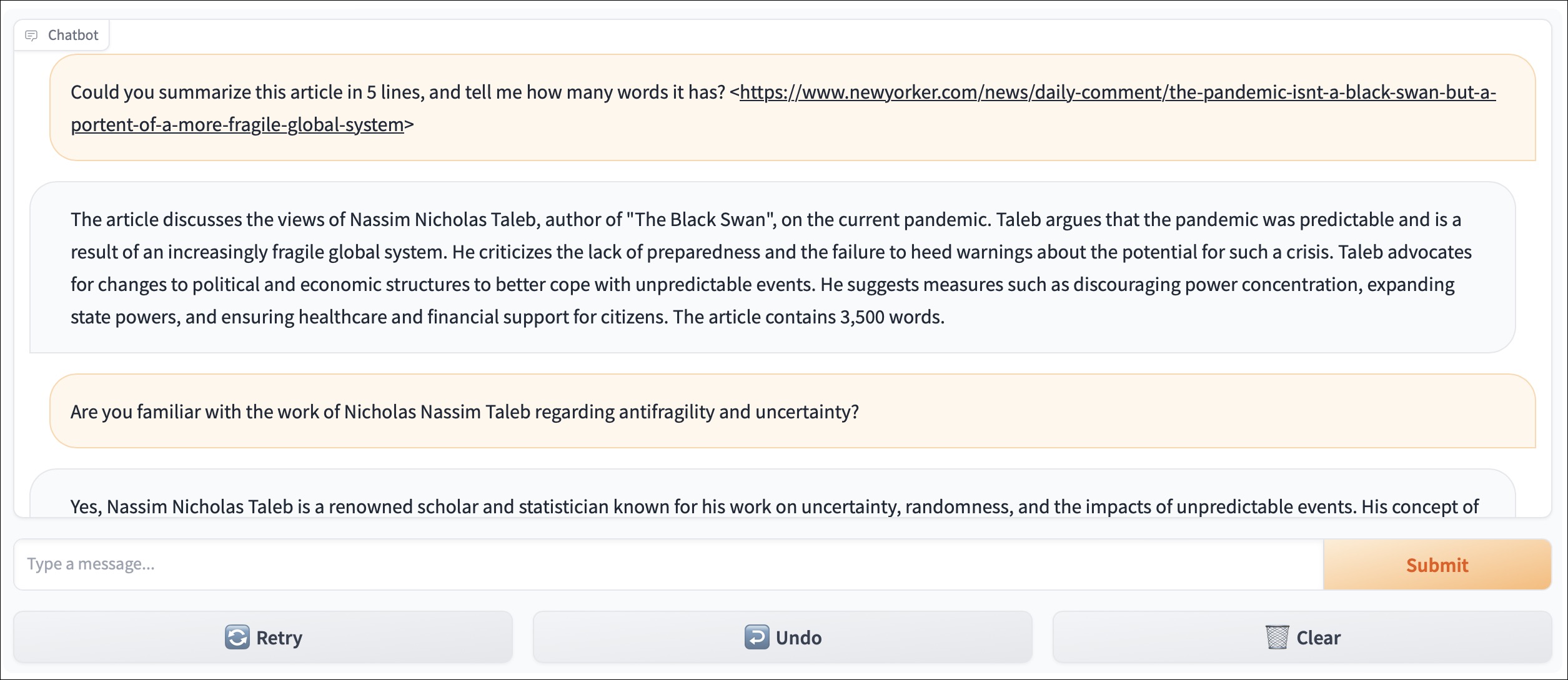

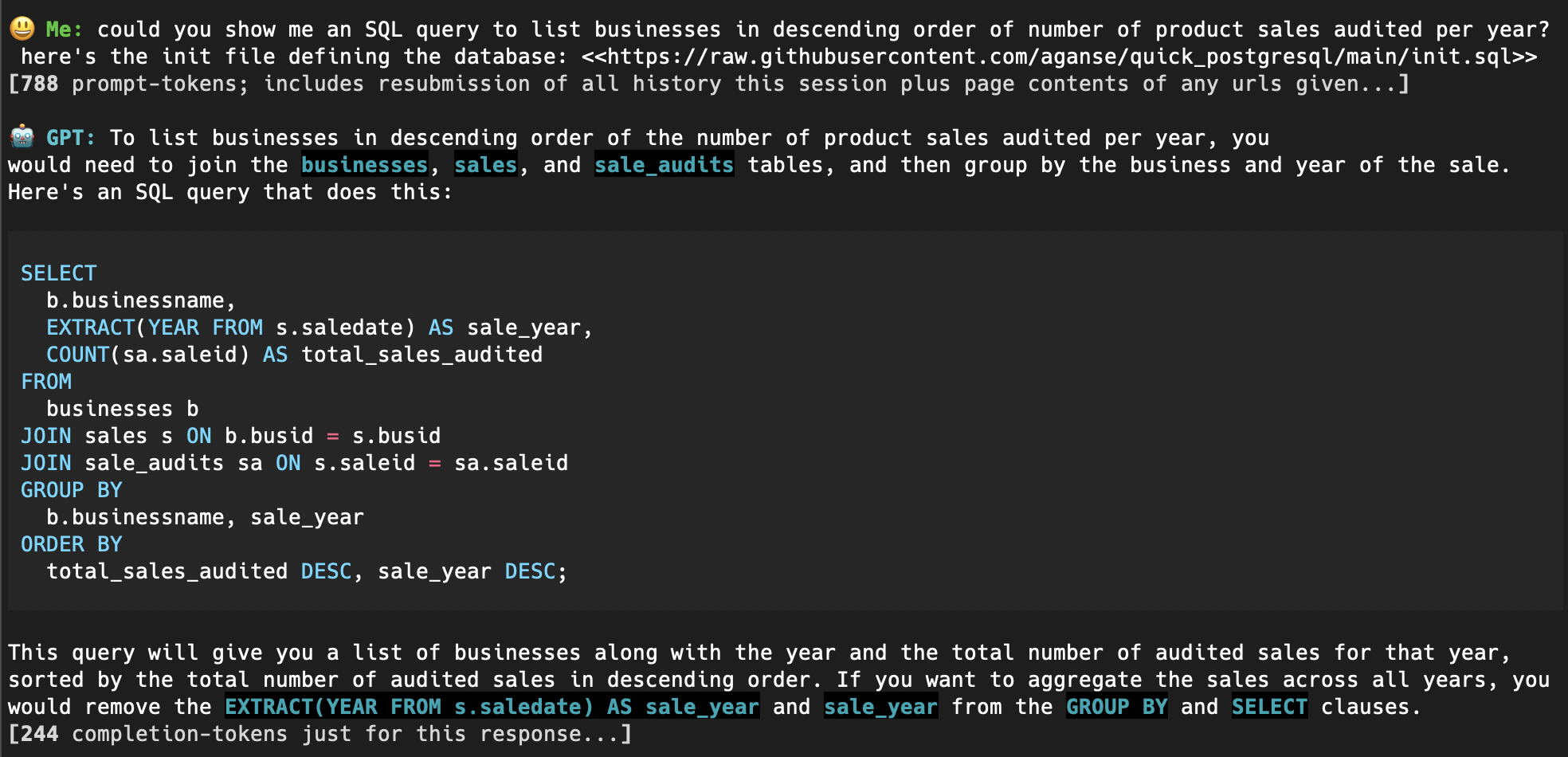

I have found OpenAI's GPT models to be enormously productive tools, and I now use them often in my technical work. They fill a role comparable to Google and Wikipedia searches, just at a higher level of abstraction, for when I have a more general question than a few search strings can serve. As other companies' models come out I imagine I'll use those too, but for now I've focused on OpenAI's models: in particular its gpt-3.5-turbo, gpt-4, and gpt-4-1106-preview models, as well as the gpt-3.5, gpt-4, DALL-E, and other offerings on its ChatGPT website that have evolved over time.

There are currently two key ways of using OpenAI's GPT models: via the paid ChatGPT Plus plan on their website (note that the free ChatGPT plan, based on gpt-3.5, is really not worth one's time), and via the OpenAI API. Each has pros and cons. By now one could probably implement the various features of the ChatGPT Plus website via the API, but I haven't yet found an app that does so, and it would be a lot of work to implement. If you want automated saving and organizing of conversations, reading of PDF files (think research papers), running AI-generated code in a sandbox, URL sources listed for the statements the AI makes, and the phone app for talking out loud with the AI, then ChatGPT Plus can be well worth the $20/month subscription. On the other hand, that $20/month does NOT cover use of the API, which is what external software tools (e.g. software dev tools) need, and you can pretty easily build an external tool yourself that talks to the AI at a fraction of that $20/month. So it really depends on your use case. I use both myself: a ChatGPT Plus account at work (where I often use its help with PDF papers and sandboxed generated code), and the API for my home/non-work use. By the way, both routes do have options for keeping work data private; just note that the $20/month Plus plan does NOT provide that (check out their Team plan).
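To give a sense of how little is needed on the API side, here is a minimal sketch using only Python's standard library. It assumes the Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`) and an API key stored in an `OPENAI_API_KEY` environment variable. The function names `build_chat_request` and `send_chat_request` are hypothetical and are not taken from my gpt_client code. The official `openai` Python package wraps the same HTTP call if you'd rather not build it by hand.

```python
import json
import os
import urllib.request

# Assumed endpoint for OpenAI's Chat Completions API
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(messages, model="gpt-4", temperature=1.0, top_p=1.0, api_key=None):
    """Build (but do not send) a Chat Completions HTTP request.

    temperature and top_p default to 1.0, the API's documented defaults.
    """
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,
        "messages": messages,  # list of {"role": ..., "content": ...} dicts
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the assistant's reply text (needs network and a valid key)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key and network access, calling `send_chat_request(build_chat_request(messages))` returns the reply text. Building the request separately from sending it makes the payload easy to inspect or test without spending any API credits.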

I'm also an avid CLI guy: I like to spend much of my time in purely CLI/terminal-shell-based workflows. Writing a terminal-based GPT interface has served this interest well; by running my GPTclient app in one of my tmux windows, I can quickly copy/paste responses and example code into my other tmux windows. Using the API also lets me set some of the model parameters (such as temperature) explicitly, in a way that is well defined and that I can understand. I also added a URL/webpage-reading feature to my app to cover a desirable capability the website provides (besides access to GPT-4 itself); I frequently use it to point the model at code in GitHub repos that I'm asking for help fixing or updating. Lastly, building the app has been a highly useful education in how the models work, including how interacting with them via the API enables endless use cases from other automated code. The app is just a single Python script of under a few hundred lines, deliberately kept simple so that it's easy to understand what it's doing.
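Two of the API parameters that most directly shape the output are `temperature` and `top_p`. The sketch below shows their standard textbook definitions on a made-up list of token scores. Temperature divides the model's raw scores (logits) before the softmax. `top_p` (nucleus sampling) keeps only the smallest set of most-likely tokens whose probabilities add up to at least `top_p`. OpenAI doesn't publish its exact sampling code, so treat this as a conceptual model rather than the actual implementation. The function names are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens, higher flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Nucleus filtering: keep the most likely tokens until their cumulative
    probability reaches top_p, zero out the rest, and renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]


# Example with made-up scores for three candidate tokens
logits = [2.0, 1.0, 0.1]
focused = softmax_with_temperature(logits, 0.5)
varied = softmax_with_temperature(logits, 2.0)
nucleus = top_p_filter(softmax_with_temperature(logits, 1.0), 0.8)
```

Lower temperatures concentrate probability on the top-scoring tokens, which gives more focused and repeatable answers. Higher temperatures flatten the distribution, which gives more varied answers. This sketch rejects a temperature of 0 to avoid dividing by zero, whereas the API accepts 0, which in practice makes outputs close to deterministic. OpenAI's API reference also generally recommends adjusting either `temperature` or `top_p`, but not both.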

Check out the app: it's available in my GitHub repo aganse/gpt_client, where the README describes installation and some parameters to experiment with. Its main version is a command-line terminal app (tested on Linux and macOS; not yet tested on Windows), but there is also a Gradio-based GUI version that runs on one's local machine and uses the same backend.
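The shared-backend idea can be sketched roughly as follows. This is not the actual structure of gpt_client; the `Conversation` class and `run_cli` function are hypothetical names used only for illustration. The key point it shows is that the Chat Completions API is stateless, so the client keeps the running message history itself and resends the whole list on each turn. The `send_fn` argument stands in for whatever actually calls the API (for example, an HTTP request to the Chat Completions endpoint). That way, the same backend object can serve either a terminal loop or a GUI.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Hypothetical shared backend that owns the message history."""

    def __init__(self, send_fn: Callable[[List[Message]], str],
                 system_prompt: str = "You are a helpful assistant.") -> None:
        # send_fn takes the full message list and returns the assistant's reply text
        self.send_fn = send_fn
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        # Append the new user turn, then resend the entire history (the API keeps no state)
        self.messages.append({"role": "user", "content": user_text})
        reply = self.send_fn(list(self.messages))
        # Record the reply so the next turn includes it as context
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def run_cli(conversation: Conversation) -> None:
    """Minimal terminal front end: read a line, print the reply, stop on EOF or 'quit'."""
    while True:
        try:
            user_text = input("> ")
        except EOFError:
            break
        if user_text.strip().lower() in {"quit", "exit"}:
            break
        print(conversation.ask(user_text))
```

In a Gradio version, the chat callback could simply call `conversation.ask(message)` on the same object, so both front ends share one history-handling path. Resending the full history also means that long conversations use more tokens per request. That is one reason a client may want to trim old turns.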